OpenAI launched ChatGPT last year, and many Big Tech businesses followed suit to keep their competitive edge. Microsoft and Google have been at the forefront of integrating AI into their systems, building the likes of GPT-3 and Bard into their search engines. As a result, users of AI-powered search engines receive contextual, human-like responses to their questions, which has captured the attention of everyone from students to executives.

But why is there such a rush to integrate large language models (LLMs)? Mostly because models such as GPT-3 have drawn in the tech community with their ability to mimic human speech and writing patterns.

LLMs can pick up on the subtlest features of human speech because they are trained on an immense amount of data and have billions of parameters. This lets them interpret colloquialisms, subtext and nuanced questions and, in return, give responses that inspire confidence in users.

Even though LLMs such as GPT-3 can understand and produce natural language, they can’t organically produce value for many organisations in their current state. Their expansive size and scale work against them, especially for businesses where factual accuracy is mission-critical, and that includes many online search queries.

LLMs aren’t well suited to fields where expert knowledge is a must. In fact, they aren’t well placed in any revenue-generating role where factual accuracy comes first. That raises two questions: why is this the case, and what does it mean for businesses that use, or are looking to use, AI?

That little problem of expansive scale

The factual inaccuracy of LLMs’ outputs comes down to the data they are trained on to interpret and imitate language: both its quality and its enormous scope.

At organisations such as OpenAI and Google, training an LLM involves pulling together millions of examples of text from all over the internet. The data spans almost every type of content available on the web, across almost every topic imaginable.

The problem with training LLMs on this vast amount of data becomes apparent when they are used in specialist fields, even before they are asked about field-specific jargon. Expert terms often have precise meanings in a niche field that differ from their use in everyday speech or writing. The issue goes beyond a model learning a single definition of a specialist term, because different specialist fields may relate the same concepts and terms in contrasting ways.

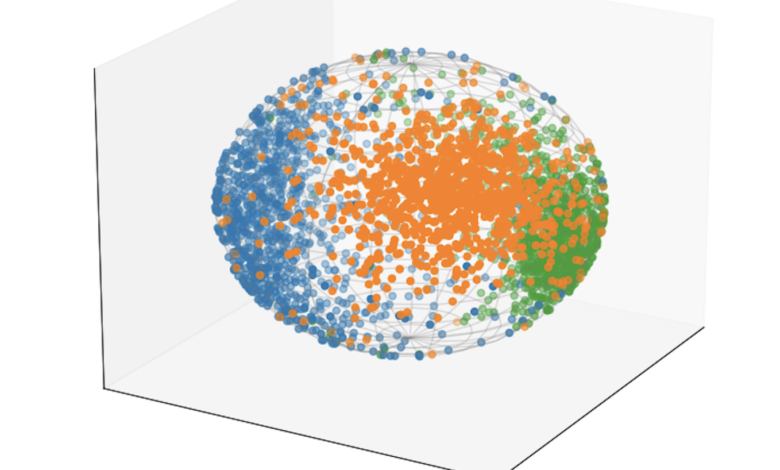
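To make the point about specialist terms concrete, here is a minimal Python sketch. The glossary entries, field names and the `lookup` helper are all hypothetical, chosen only for illustration; this is not how any particular model stores meaning. It shows that the same term resolves to a single sense once the field is known, and stays ambiguous when it is not.

```python
# Hypothetical glossary: the same term carries a different sense in each field.
SENSES = {
    "culture": {
        "microbiology": "microorganisms grown in a controlled medium",
        "sociology": "shared customs and beliefs of a group",
    },
    "yield": {
        "finance": "income returned on an investment",
        "chemistry": "amount of product obtained from a reaction",
    },
}


def lookup(term, field=None):
    """Return the senses of `term`, restricted to `field` when one is given."""
    senses = SENSES.get(term.lower(), {})
    if field is None:
        # No field context: every known sense is a candidate, so the term is ambiguous.
        return sorted(senses.values())
    # With a field, at most one sense applies.
    return [senses[field]] if field in senses else []


ambiguous = lookup("culture")
precise = lookup("culture", field="microbiology")
```

Without a field, `lookup("culture")` returns two competing senses. A model trained on undifferentiated web text faces a similar ambiguity, but without an explicit field label to resolve it.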

These issues are the leading cause of LLMs drawing false analogies from a separate field, misunderstanding the questions they have been asked and mixing up definitions when given a query relating to a specialist area. Training LLMs on vast amounts of data gives them a good ability to generalise and to express themselves in a plausible, rich vocabulary. It also means they can provide generalised answers that are comprehensive and convincing, but these same capabilities come with a drawback: they are not good at being exact and to the point when needed. Yet not all models are like this. Smarter models don’t compromise on exactness; they balance plausible, well-phrased answers with precision.

Cumbersome fact-checking

Traditional search engines process queries made up of a string of keywords and return a number of relevant results. They crawl millions of websites, recording each page’s content and links, in order to deliver the top results. LLMs for search, by contrast, generate only one result: a text-based response that cites none of the information it is founded upon.

With the source unknown, LLM users have no way of fact-checking results, which are already unreliable. Organisations where accuracy is critical will need to validate those results using traditional search engines. Without listed sources, LLMs for search aren’t usable at scale in their current state, because prompting the model and then verifying its answer proves more time-consuming than searching directly.
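The contrast drawn in this section can be sketched with a toy keyword search in Python. The pages, the `example.org` URLs and the function names are hypothetical, and the scoring is a simple count of matching keywords rather than anything a real search engine uses. The point is only that every result arrives with a source the reader can open and check.

```python
from collections import defaultdict

# Hypothetical mini-corpus: URL -> page text.
PAGES = {
    "https://example.org/a": "large language models generate fluent text",
    "https://example.org/b": "search engines rank pages by keywords and links",
    "https://example.org/c": "language models and search engines compared",
}


def build_index(pages):
    """Build an inverted index mapping each word to the URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(query, index):
    """Rank URLs by how many query keywords they contain (ties broken by URL)."""
    scores = defaultdict(int)
    for word in query.lower().split():
        for url in index.get(word, ()):
            scores[url] += 1
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


INDEX = build_index(PAGES)
results = search("search engines", INDEX)
```

Here `results` is a ranked list of `(url, score)` pairs, so each hit can be traced back to a page. A single generated paragraph with no citations offers no equivalent handle for verification.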

Smarter models might be the answer

LLMs struggle with specialist or niche knowledge requests because of the data they are trained on, which leaves room for smarter models to be used in these specialist fields.

Smart language models are trained on smaller, curated, high-quality datasets and scientific content designed to focus on certain business fields or research areas. Factual accuracy is also front and centre for these models. Instead of churning out inaccurate yet convincing results as LLMs do, smarter models deliver results with greater accuracy and cite the sources used to reach them.

LLMs’ human-like responses can fool people into thinking they are accurate, but there is no guarantee that they are. The challenge is compounded by two things: LLMs do not cite the sources their answers are founded on, and the internet operates on links, ranks and ads, which complicates the analysis that both LLMs and search engines rely on.

Smart language models offer pointers on how LLMs can improve. Above all, prioritising accuracy and explanation is key if LLMs are to change the way we search and potentially disrupt traditional search engines.